Scorum user @betman wrote a post on Scorum about his "Christmas dream" of a decentralized bet exchange where users can add events from any sport they want and bet against each other. At first sight I was puzzled: how can users enter whatever they want, who will check whether an event exists or what the result is once the game has been played, what if a user spells a club name the wrong way, and so on? Betman replied that user input and results can be checked against the website betexplorer. The main obstacle in this project is how to get that valuable information from the source, and the fee they (or some other source) might ask for it.

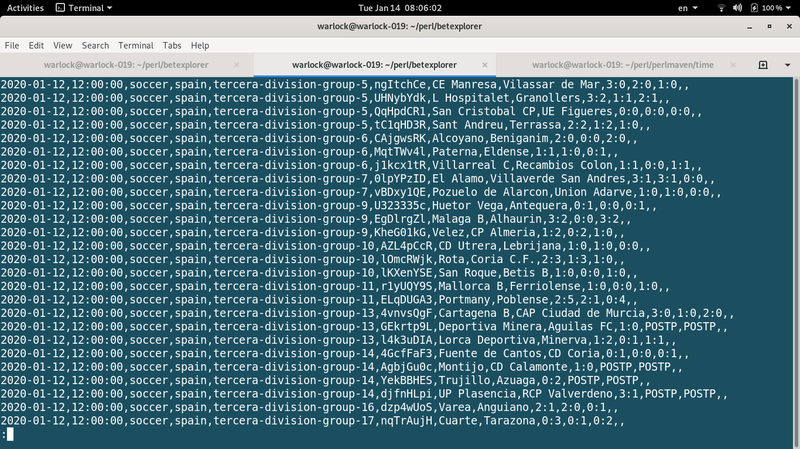

Betexplorer's result and fixture list looks like this:

I'm not too good a Perl (Practical Extraction and Report Language) programmer, but I have some experience, and while searching for freelance jobs online I found a job to "scrape a weather prediction website" and get useful information from it. I decided to practice a bit by scraping this web page to get useful information such as the date and time of the game, country, league name, home and away club names, and the final result if the game has already ended. The task is simple: automatically fetch the web page, parse it, and extract the information I need in an intelligent way. Analyzing the web page, I noticed 4 possibilities and 4 HTML tags I'm interested in:

1) The game has already ended, in which case I have the full-time and half-time results, plus the penalties result (if the game went to that stage).

2) The game is postponed and has the keyword POSTP in place of the result.

3) The game is in progress.

4) The game is in the future.
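The four cases above can be told apart with a few checks. This is only a minimal sketch in Python (not the Perl script from this post). The function name `classify_game` and the status labels are made up for illustration, and it assumes that an empty result with a kickoff time in the past means the game is in progress, which may not match how betexplorer actually marks live games.

```python
from datetime import datetime


def classify_game(result: str, kickoff: datetime, now: datetime) -> str:
    """Return 'postponed', 'finished', 'in_progress' or 'scheduled'.

    Hypothetical helper: `result` is the text found where the score
    normally goes, `kickoff` is the scheduled start of the game.
    """
    text = result.strip()
    if text.upper() == "POSTP":
        # Case 2: the keyword POSTP stands in place of the result.
        return "postponed"
    if text:
        # Case 1: any other non-empty text is treated as a final score.
        return "finished"
    if kickoff <= now:
        # Case 3 (assumption): no result yet, but the game has started.
        return "in_progress"
    # Case 4: no result and the kickoff is still ahead.
    return "scheduled"
```

Passing `now` in as an argument instead of calling `datetime.now()` inside keeps the function easy to check with fixed dates.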

I quickly identified the HTML tags I want to parse and managed to get useful information from the website in the following format:

The format is date, time (these can be joined into a datetime like in MySQL), sport, country, competition name, betexplorer's code which points to the link for that game, home side, away side, then the full-time, first-half, second-half and penalties results (if they exist). The results can be empty if the game has not been played yet, or POSTP if the game has been postponed.
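To show how a line in this format could be read back, here is a rough Python sketch (again, not the original Perl script). The field order follows the description above. The `Game` class, the `parse_line` function, the comma as the only separator and the sample values in the usage note are all assumptions for illustration.

```python
import csv
from dataclasses import dataclass, fields


@dataclass
class Game:
    # Field order follows the format described in the post.
    date: str
    time: str
    sport: str
    country: str
    competition: str
    code: str          # betexplorer's code pointing to the game's link
    home: str
    away: str
    full_time: str     # empty if not played yet, "POSTP" if postponed
    first_half: str
    second_half: str
    penalties: str


def parse_line(line: str) -> Game:
    """Parse one comma-separated line into a Game (hypothetical helper)."""
    rows = list(csv.reader([line]))
    row = [cell.strip() for cell in rows[0]] if rows else []
    expected = len(fields(Game))
    if len(row) > expected:
        raise ValueError(f"expected at most {expected} fields, got {len(row)}")
    # Trailing result fields may be missing for games without a result.
    row += [""] * (expected - len(row))
    return Game(*row)
```

For example, `parse_line("2019-01-05,15:00,soccer,England,FA Cup,AbCd1234,Club A,Club B,2:1,1:0,1:1,")` gives a `Game` whose `home` is `"Club A"` and whose `penalties` is empty. The values in that line are invented, not real scraped data.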

From this point it's not a long road to inserting the data into a MySQL database. It's not the whitest method of getting info, but when you don't have the money, or they ask for too much, we can turn toward the grey zone. I don't know what your opinion is about grey (not 100% legal) zones, but I have been living and working in such a zone for years in telephony, phone marketing and the call center business. If the Scorum team fears legal action from betexplorer, I can easily set up a server somewhere, do all that grey business, and fill all the info into a MySQL database against which the new decentralized bet exchange can check events and results. The exchange stays fully in the white zone by saying: we have an agreement with Mr. so-and-so to gather information from his database, and how he obtains that information isn't our business. I can always say I'm entering all the data manually (which could really be the case, given the amount of free time I currently have).

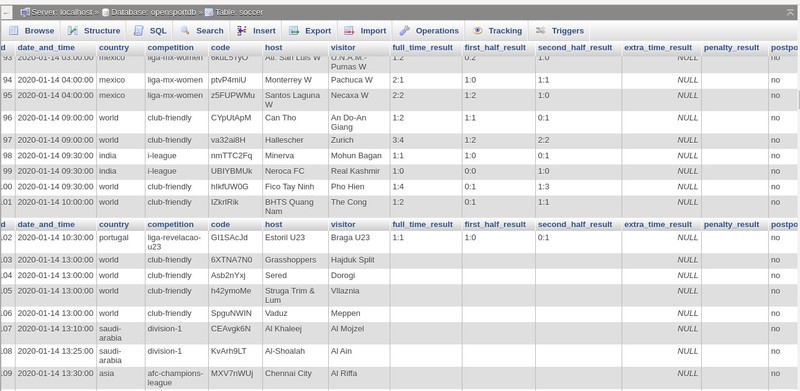

UPDATE: here is how it could look in the db:

Scenario: one VPS rented under one name (5 USD monthly) runs a cron job with this Perl script, gathering the info and converting the HTML data into the txt file format we want. It goes through ExpressVPN (13 USD monthly), changing its IP every day or every hour so the requests stay under the radar. Another VPS, rented under another name, fetches the file and inserts it into MySQL, and the new bet exchange checks games and results against that MySQL database.
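The second half of that scenario (insert into the database, then let the exchange check a game) could look roughly like this. It is only a sketch: it uses Python's built-in `sqlite3` as a stand-in for MySQL so that it runs without a server, and the table name `games`, its columns and both function names are invented for illustration. They are not the real schema.

```python
import sqlite3

# Hypothetical table layout; SQLite stands in for MySQL here.
SCHEMA = """
CREATE TABLE IF NOT EXISTS games (
    code        TEXT PRIMARY KEY,
    kickoff     TEXT NOT NULL,
    sport       TEXT NOT NULL,
    country     TEXT NOT NULL,
    competition TEXT NOT NULL,
    home        TEXT NOT NULL,
    away        TEXT NOT NULL,
    full_time   TEXT NOT NULL DEFAULT ''
)
"""


def store_game(conn: sqlite3.Connection, game: dict) -> None:
    """Insert a game, or overwrite it if the same code was stored before."""
    conn.execute(
        "INSERT OR REPLACE INTO games "
        "(code, kickoff, sport, country, competition, home, away, full_time) "
        "VALUES (:code, :kickoff, :sport, :country, :competition, "
        ":home, :away, :full_time)",
        game,
    )
    conn.commit()


def find_game(conn: sqlite3.Connection, home: str, away: str, day: str):
    """Return (code, full_time) for a game on `day` (YYYY-MM-DD), or None."""
    return conn.execute(
        "SELECT code, full_time FROM games "
        "WHERE home = ? AND away = ? AND kickoff LIKE ?",
        (home, away, day + "%"),
    ).fetchone()
```

Using betexplorer's code as the primary key means a later run of the cron job simply overwrites the row, so a fixture stored with an empty result gets its score filled in once the game has ended. The exchange side would call `find_game` to confirm that a user-entered event exists and to read the result.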

Perl can be a great solution for tasks like this: scraping a web page and parsing text files and strings. It has powerful regular expressions and tools for that. It was built for text processing and reporting.

Now that results and fixtures are easy to get, there is no obstacle on the road toward building such a decentralized bet exchange. It would use SCR coin for making bets, creating bigger demand for the coin and possibly sending it to the moon.

"It's a small step for man, but a big step for mankind."

Thanks for reading.
